“GPU rendering just hit a new level with the RTX 5090, and Pictor Network brings that power closer than ever through a decentralized GPU network.”

NVIDIA GeForce RTX 5090: A New Rendering Powerhouse

NVIDIA’s RTX 5090 is here, and it’s already shifting the conversation across the 3D rendering space.

From the outside, it looks like just another generational upgrade. But for those who push pixels for a living (3D artists, VFX studios, architects, and real-time creators), this card represents something bigger. More than raw specs, it’s a statement: GPU rendering is entering a new era of scale, realism, and speed.

But while the 5090 may redefine what’s possible, it also raises a familiar question:

How do we access this kind of power affordably, flexibly, and when we actually need it?

Let’s unpack both sides of that story.

1. What Makes a Great Rendering GPU?

3D rendering isn’t about frame rates. It’s about how efficiently your GPU handles complex scenes. Geometry, textures, lighting passes, and ray tracing all hit the card at once. The right GPU clears that load and keeps things moving.

Here’s what actually matters:

- CUDA Cores

The workhorse of most GPU render engines. In engines like Octane, Redshift, and Blender Cycles, CUDA cores handle the bulk of ray tracing, shading, and sampling work. The more CUDA cores, the more work can run in parallel and the faster your renders finish.

- RT Cores

These specialize in accelerating ray traversal and intersection tests. For engines that support them (Cycles with OptiX, Unreal, Omniverse), RT cores offload heavy ray calculations and boost path tracing performance.

- VRAM

Your entire scene (textures, geometry, caches, AOVs) has to live in VRAM. If it doesn’t fit, the render may fail outright or fall back to much slower system memory, depending on the engine. For high-end production, 24 GB+ is often the baseline.

- Memory Bandwidth & Architecture

Wide memory buses and fast GDDR6X or GDDR7 memory move data quickly to and from the GPU’s cores. Modern architectures also include large L2 caches to reduce memory bottlenecks during heavy frame processing.

- Tensor Cores

Primarily designed for AI workloads, but increasingly useful in rendering. Engines that support AI denoising (such as the OptiX denoiser in Blender Cycles, or Redshift’s denoisers) use Tensor cores to clean up noise faster, so you can render fewer samples for the same perceived quality.
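To get a feel for how quickly VRAM fills up, here is a rough back-of-the-envelope estimator. It is a sketch under simplifying assumptions, not how any particular render engine accounts for memory: textures are treated as uncompressed, a full mip chain is assumed to add about a third, and everything else (geometry, BVH, caches, AOVs) is passed in as a single estimate. The names `Texture`, `estimate_scene_gib`, `fits_in_vram`, and the 10% headroom default are all hypothetical.

```python
from dataclasses import dataclass

GIB = 1024 ** 3

@dataclass
class Texture:
    width: int
    height: int
    channels: int = 4           # e.g. RGBA
    bytes_per_channel: int = 1  # 1 = 8-bit, 2 = 16-bit half float, 4 = 32-bit float

    def size_bytes(self, with_mips: bool = True) -> float:
        base = self.width * self.height * self.channels * self.bytes_per_channel
        # A full mip chain converges to 4/3 of the base level's size.
        return base * 4 / 3 if with_mips else base

def estimate_scene_gib(textures: list[Texture], other_gib: float) -> float:
    """Texture memory plus a caller-supplied estimate for geometry, BVH, caches, AOVs."""
    return sum(t.size_bytes() for t in textures) / GIB + other_gib

def fits_in_vram(scene_gib: float, vram_gib: float, headroom: float = 0.1) -> bool:
    """Hypothetical rule: keep a fraction of VRAM free for the driver and the engine itself."""
    return scene_gib <= vram_gib * (1 - headroom)

# Illustrative scene: 200 uncompressed 4K RGBA textures plus a hypothetical 6 GiB of other data.
scene = estimate_scene_gib([Texture(4096, 4096) for _ in range(200)], other_gib=6.0)
for vram in (24, 32):
    verdict = "fits" if fits_in_vram(scene, vram) else "too tight"
    print(f"{vram} GB card: {verdict} ({scene:.1f} GiB needed)")
```

In this made-up example the textures alone come to about 16.7 GiB, so the scene is too tight for a 24 GB card with headroom but fits on a 32 GB one. Real engines often compress, tile, or stream textures, so actual usage can differ considerably.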

Ultimately, a great GPU for rendering combines raw compute, memory headroom, architectural efficiency, and smart acceleration features, all tuned to the demands of modern 3D production.

And that brings us to NVIDIA’s new flagship.

With more cores, more VRAM, faster memory bandwidth, and an all-new architecture, the RTX 5090 is expected to raise the ceiling for what a GPU for 3D rendering can deliver.

Let’s take a closer look at what we know so far.

2. RTX 5090 Specs Overview

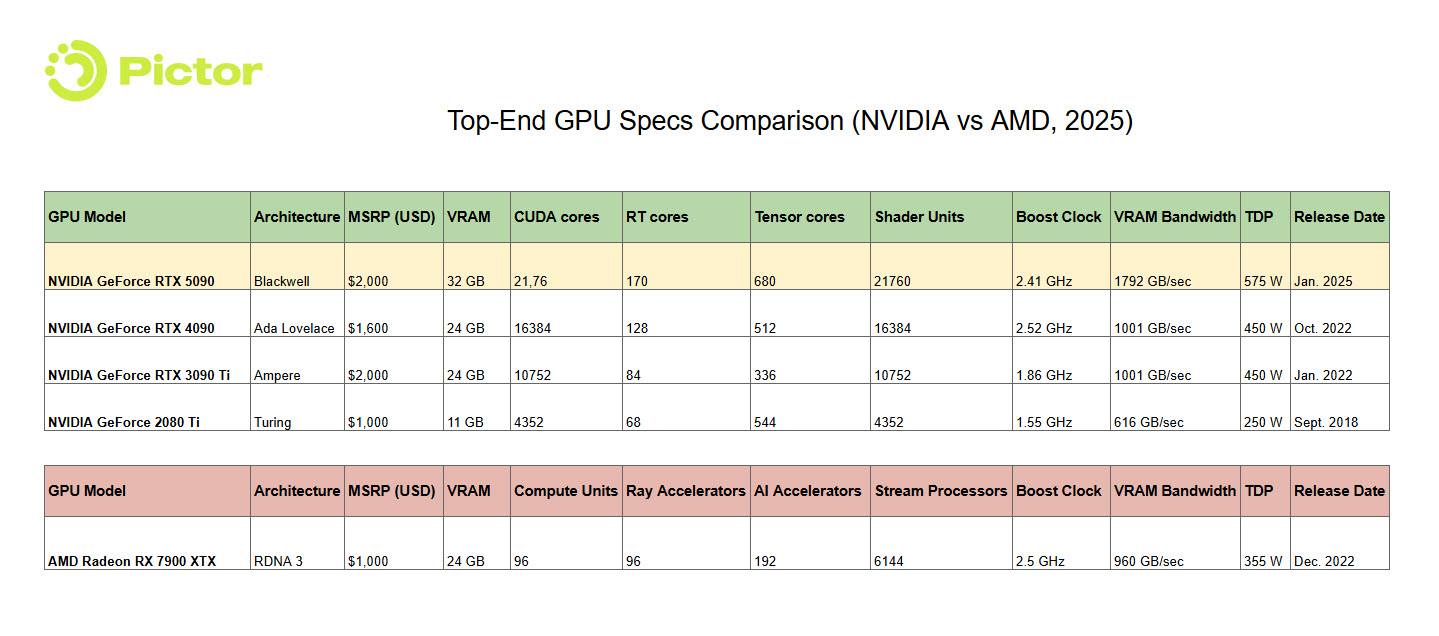

Since this article focuses on the top-tier RTX 5090, we’ll be comparing it to other top-end GPUs from recent generations, both from NVIDIA and AMD. That includes:

- RTX 4090 (Ada Lovelace)

- RTX 3090 Ti (Ampere)

- RTX 2080 Ti (Turing)

- AMD RX 7900 XTX (AMD’s most powerful RDNA3 GPU)

Top-End GPU Comparison Table

Below is a quick specs comparison to give us context before we move into real-world rendering performance.

Top-End GPU Specs Comparison (NVIDIA vs AMD, 2025)

As is typical for NVIDIA, the company has once again pushed the boundaries of desktop GPU design, with substantial increases in VRAM, CUDA cores, RT cores, Tensor cores, memory bandwidth, and TDP over the previous-generation RTX 4090.

Comparing the top-end SKUs from NVIDIA’s previous three generations highlights just how substantial these upgrades are:

- Compared to the RTX 2080 Ti: The RTX 5090 has five times the CUDA cores (21,760 vs. 4,352), close to triple the VRAM and memory bandwidth, and more than twice the TDP.

- Compared to the RTX 4090: Even against its direct predecessor, the 5090 brings meaningful upgrades, most notably in the memory subsystem: an additional 8 GB of VRAM (32 GB in total) and 1.792 TB/s of memory bandwidth, roughly 78% more than the 4090’s 1,008 GB/s.

With a $2,000 price tag, the RTX 5090 is a serious investment, but NVIDIA appears intent on delivering performance to match.

But Specs Aren’t Everything…

Hardware specs tell us what a GPU should be capable of. But what really matters is how it performs inside real render engines.

Let’s move from theory to practice and see how these GPUs stack up in Blender and V-Ray when it’s time to hit “Render.”

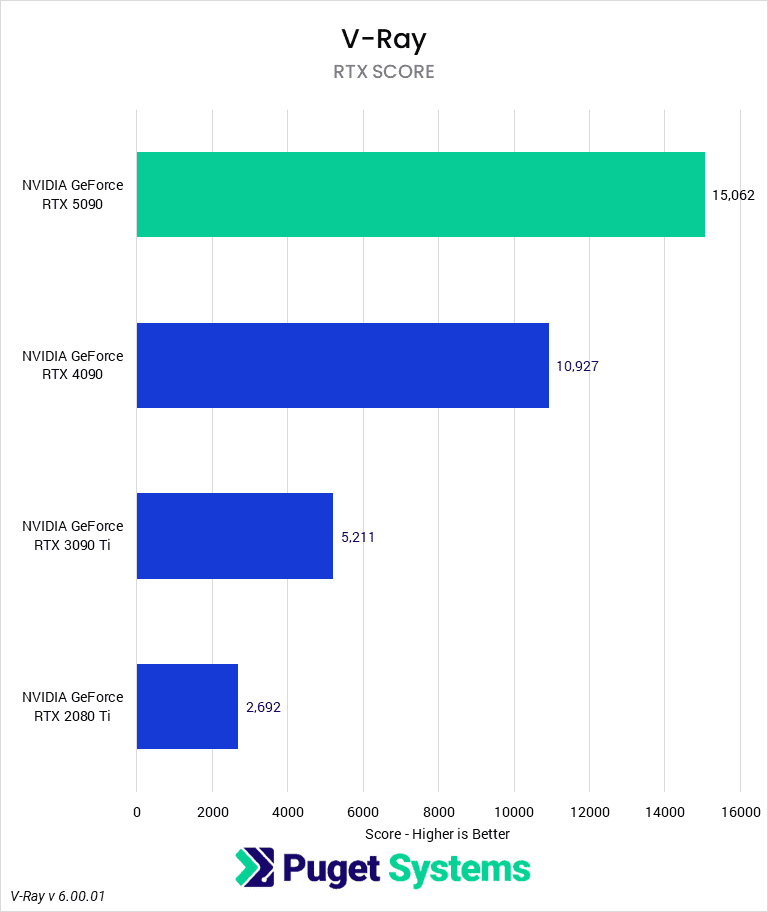

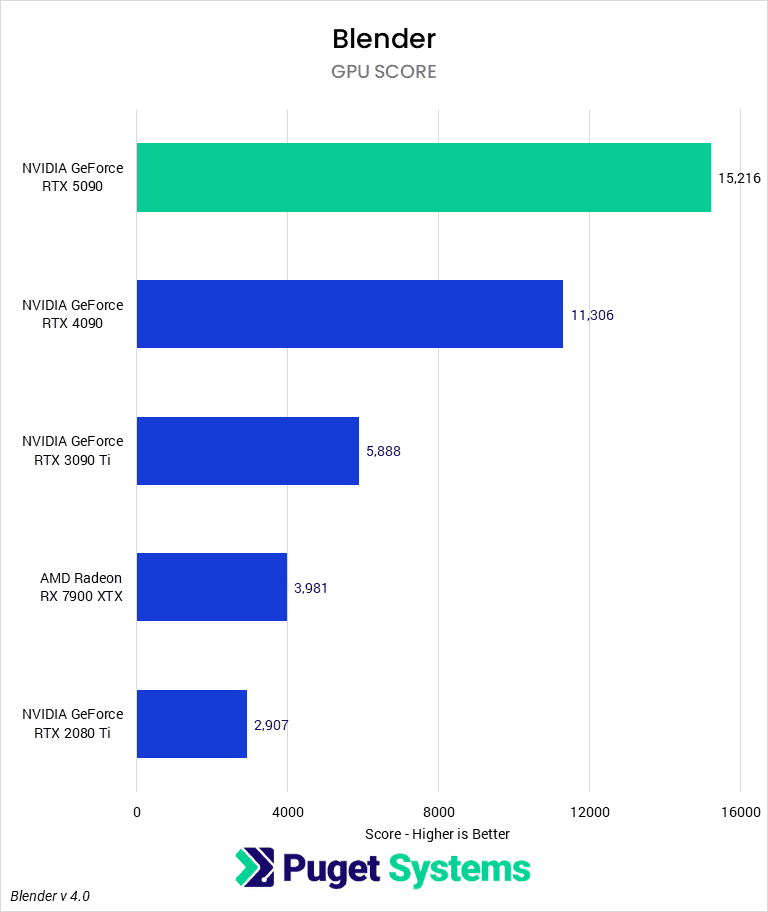

3. Real-World Rendering Performance (V-Ray & Blender)

To evaluate how the RTX 5090 performs as a GPU for rendering, we turn to early benchmarks from Puget Systems in two widely used render engines: V-Ray and Blender.

Due to limited early driver and software support, OctaneBench and Redshift (via Cinebench) were not compatible with the RTX 5090 at the time of testing. There was also a known issue with CUDA rendering in V-Ray that affected performance in some scenes. NVIDIA is likely to patch this, but it’s a reminder that early adoption of any new rendering GPU can come with edge-case limitations.

Still, where support is in place, the performance uplift is undeniable.

Source: Puget Systems

- In V-Ray RTX rendering, the RTX 5090 is 38% faster than the 4090 and nearly 3× faster than the 3090 Ti. It costs $400 more than the 4090 (about 25% more at MSRP), but a 38% performance boost in V-Ray makes it a worthwhile upgrade for rendering professionals.

- In Blender GPU rendering, it delivers a 35% performance boost over the 4090, making it one of the most efficient upgrades available for production scenes.
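Price and speed can be folded into a single number: performance per dollar relative to the 4090. The sketch below uses only the figures quoted above (the $2,000 price, the $400 difference, and the 38% and 35% speedups) and ignores the cost of supporting hardware discussed in the next section. `relative_perf_per_dollar` is an illustrative helper, not part of any benchmark suite.

```python
def relative_perf_per_dollar(speedup: float, new_price: float, old_price: float) -> float:
    """(new_perf / new_price) divided by (old_perf / old_price).

    `speedup` is new_perf / old_perf, e.g. 1.38 for "38% faster".
    A result above 1.0 means the new card delivers more performance per dollar.
    """
    return speedup / (new_price / old_price)

# Figures from the text: $2,000 for the RTX 5090, $400 less for the RTX 4090.
price_5090 = 2000.0
price_4090 = price_5090 - 400.0

for engine, speedup in {"V-Ray RTX": 1.38, "Blender": 1.35}.items():
    ratio = relative_perf_per_dollar(speedup, price_5090, price_4090)
    print(f"{engine}: {speedup:.2f}x speed for {price_5090 / price_4090:.2f}x price "
          f"-> {ratio:.3f}x performance per dollar")
```

With those inputs, the 5090 comes out roughly 10% ahead in V-Ray and 8% ahead in Blender on performance per dollar at MSRP, before counting any PSU or cooling upgrades.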

In practice, this means faster turnarounds, more real-time feedback, and greater flexibility, all things that matter when your GPU for rendering isn’t just a component, but the core of your creative workflow.

4. The Catch: Power Like This Doesn’t Come Cheap

There’s no denying it. NVIDIA’s RTX 5090 is a monster of a GPU for rendering, pairing best-in-class performance with a next-gen feature set. But that power comes at a cost.

With a $2,000 MSRP, and street prices likely higher once AIB (add-in board partner) models and market demand kick in, this isn’t a casual upgrade. Add to that the need for a high-wattage power supply, better cooling, and possibly a new motherboard, and you’re looking at a full workstation overhaul just to support the card.

And that’s if you can even get one. Limited launch supply, scalping, and pre-order surges will likely keep the RTX 5090 out of reach for most creators, especially freelancers or small teams who only need high-end GPU power occasionally.
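Whether buying makes sense comes down to utilization. A simple break-even model divides the up-front cost by how much you save per hour by owning instead of renting. This is a minimal sketch: the hourly rental rate, the running cost of ownership, and the extra hardware budget in the usage example are hypothetical placeholders, not quoted prices from Pictor Network or anyone else.

```python
import math

def break_even_hours(purchase_cost: float, hourly_rental_rate: float,
                     ownership_cost_per_hour: float = 0.0) -> float:
    """Rendering hours after which owning costs the same as renting.

    purchase_cost: up-front cost (card plus any PSU, cooling or motherboard upgrades).
    hourly_rental_rate: hypothetical price per hour for remote access to a comparable GPU.
    ownership_cost_per_hour: hypothetical running cost of owning (e.g. electricity).
    """
    saving_per_hour = hourly_rental_rate - ownership_cost_per_hour
    if saving_per_hour <= 0:
        return math.inf  # owning never saves money per hour, so it never breaks even
    return purchase_cost / saving_per_hour

# Hypothetical inputs: the $2,000 card from the text plus $500 of supporting upgrades,
# a $1.00/hour rental rate, and $0.10/hour of electricity when owning.
upfront = 2000 + 500
hours = break_even_hours(upfront, hourly_rental_rate=1.00, ownership_cost_per_hour=0.10)
print(f"Break-even after about {hours:,.0f} rendering hours")
```

With these made-up numbers, break-even lands at roughly 2,800 rendering hours, which is more than five years for someone who only needs the card about 10 hours a week.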

5. Rethinking Access to Rendering GPUs: Use an RTX 5090 Without Buying One

Here’s the thing: even those who buy the RTX 5090 won’t be using it 24/7.

Studios, render farms, and pro users often have powerful GPUs sitting idle overnight, between projects, or during slow weeks. Instead of letting that hardware go unused, Pictor Network connects idle GPUs into a decentralized, global rendering network.

When one creator’s machine is idle, another creator can use its power remotely, securely, and on demand. Under this model, you don’t have to own a 5090 to use one; you just need access to the network that connects you to it.
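To make the idea concrete, here is a minimal sketch of how a render job might be matched to an idle machine, assuming a hypothetical scheduler that looks only at idle status, available VRAM, and a speed score. This is not Pictor Network’s actual scheduling logic, which the article does not describe; `Node`, `RenderJob`, `pick_node`, and `relative_speed` are illustrative names.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Node:
    node_id: str
    gpu: str
    vram_gib: int
    relative_speed: float  # hypothetical throughput score; higher is faster
    idle: bool

@dataclass
class RenderJob:
    job_id: str
    vram_needed_gib: float

def pick_node(job: RenderJob, nodes: list[Node]) -> Optional[Node]:
    """Return the fastest idle node with enough VRAM for the job, or None if none qualifies."""
    candidates = [n for n in nodes if n.idle and n.vram_gib >= job.vram_needed_gib]
    return max(candidates, key=lambda n: n.relative_speed, default=None)

# Illustrative pool: speed scores loosely echo the V-Ray figures above.
nodes = [
    Node("a", "RTX 4090", 24, 1.00, idle=True),
    Node("b", "RTX 5090", 32, 1.38, idle=False),
    Node("c", "RTX 5090", 32, 1.38, idle=True),
]
chosen = pick_node(RenderJob("shot-010", vram_needed_gib=28), nodes)
print(chosen.node_id if chosen else "no suitable idle node")
```

A real network would also weigh price, latency, reliability, and data transfer; filtering on VRAM first simply mirrors the point that a scene which doesn’t fit in memory can’t run on that card at all.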

And if you do own one? You can contribute your idle time to the network and earn passively from global rendering workloads, with no middlemen, no centralized data centers, just compute flowing directly where it’s needed.

In a world where GPU rendering power is more essential and more expensive than ever, access becomes the new edge. Pictor Network isn’t just changing how we render; it’s changing who gets to render at this level.

Whether you want to rent RTX-class power or put your GPU to work, Pictor Network connects creators to compute and GPUs to earnings.

👉 Learn more: pictor.network

💬 Join the community: X | Telegram | Discord | LinkedIn | YouTube | Medium | Substack