“Everything 3D creators should know to render smarter and get the most out of their GPUs.”

1. Introduction: The GPU is the Workhorse of 3D Rendering

Once your modeling, lighting, rigging, and simulation are done, one step remains: rendering. And when it comes to render speed, stability, and quality, nothing impacts the outcome more than your GPU.

But with options from both NVIDIA and AMD, how do you decide which GPU is right for your workflow? And once you own one, what if it isn't always enough or, worse, sits idle most of the time?

This guide covers:

- The key GPU specs that affect rendering

- A breakdown of NVIDIA vs AMD GPUs for rendering use cases

- A new way to access or monetize GPU power with a decentralized GPU network

2. Understand the GPU Specs: What Really Affects Render Performance

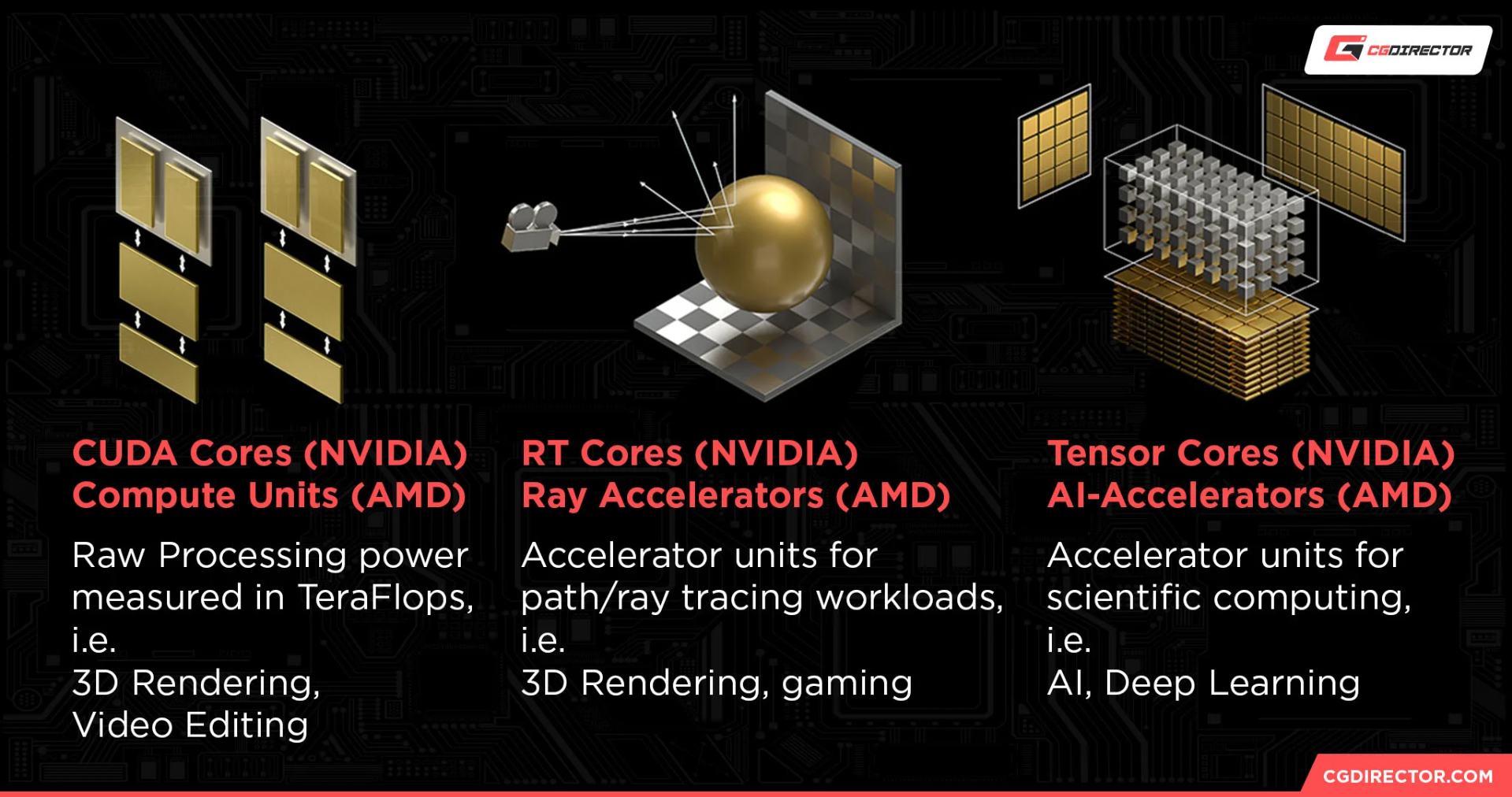

Source: CG Director

Below are the core GPU specs and how they relate to real-world rendering workloads. Familiarizing yourself with these specs is essential before evaluating or purchasing a GPU for rendering:

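- CUDA Cores (NVIDIA) | Compute Units (AMD): These define the raw processing power of a GPU. More cores generally mean faster GPU-accelerated 3D rendering, but the counts are only directly comparable within the same architecture. An AMD Compute Unit groups 64 stream processors on recent RDNA cards, so a CUDA core count and a Compute Unit count are not equivalent numbers.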

- Tensor Cores (NVIDIA) | AI Accelerators (AMD): Specialized units built for AI and deep-learning workloads. In rendering, they can also accelerate features such as AI denoising in some render engines.

- RT Cores (NVIDIA) | Ray Accelerators (AMD): Dedicated to ray tracing. These boost ray tracing performance in supported engines like Cycles, Redshift, and Arnold.

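- VRAM: Determines how much scene data (geometry, textures, and acceleration structures) your GPU can hold in memory. When a scene exceeds VRAM, renders slow down sharply or fail outright, so more VRAM lets you handle larger scenes safely. 8 GB is a practical minimum, but 12–24 GB is recommended for modern workloads.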

- GPU Clock: The core speed of the GPU. Clock speeds are only meaningful when comparing cards of the same architecture. Raising the clock on the same card (by overclocking) may improve render performance in some scenarios, at the cost of higher power draw and heat.
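To put the VRAM figures above in perspective, here is a rough Python sketch that estimates how much memory uncompressed textures occupy. It is a back-of-the-envelope illustration, not how any particular render engine allocates memory: it assumes plain 8-bit RGBA textures with a full mipmap chain, and the function names `texture_bytes` and `scene_texture_gib` are hypothetical. Real engines may compress, tile, or page textures out of core, and geometry, ray tracing acceleration structures, and frame buffers need VRAM of their own.

```python
def texture_bytes(width: int, height: int, channels: int = 4,
                  bytes_per_channel: int = 1, mipmaps: bool = True) -> int:
    """Estimate the uncompressed memory footprint of one texture, in bytes.

    Assumption: a plain, uncompressed layout (8-bit RGBA = 4 bytes per pixel).
    With mipmaps=True, every level down to 1x1 is counted, which adds
    roughly one third on top of the base level.
    """
    texel_size = channels * bytes_per_channel
    if not mipmaps:
        return width * height * texel_size

    total = 0
    w, h = width, height
    while True:
        total += w * h * texel_size
        if w == 1 and h == 1:
            break
        # Each mip level halves both dimensions, never going below 1 pixel.
        w, h = max(1, w // 2), max(1, h // 2)
    return total


def scene_texture_gib(texture_sizes: list[tuple[int, int]]) -> float:
    """Sum mipmapped 8-bit RGBA texture footprints and convert to GiB."""
    return sum(texture_bytes(w, h) for w, h in texture_sizes) / 2**30


if __name__ == "__main__":
    one_8k = texture_bytes(8192, 8192)
    print(f"One 8K RGBA texture with mipmaps: {one_8k / 2**20:.1f} MiB")
    print(f"Twenty 8K textures: {scene_texture_gib([(8192, 8192)] * 20):.2f} GiB")
```

Under these assumptions, a single 8K texture takes 256 MiB at its base level and about 341 MiB with mipmaps, so twenty of them already need roughly 6.7 GiB before any geometry is loaded. That is why 8 GB cards fill up quickly on texture-heavy scenes.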

3. NVIDIA GPU: The Industry Standard GPU for 3D Rendering

NVIDIA remains the gold standard for 3D professionals, not just because of raw power but because of its deeply integrated ecosystem across the entire rendering workflow.

If budget is not an issue, the RTX 4090 remains one of the most powerful and reliable GPUs for rendering workflows today. With 24 GB of VRAM, advanced cooling, and industry-leading CUDA and RT Core performance, it handles virtually any production-level workload with ease. For those exploring next-gen options, the RTX 5090 (if available) may push performance even further, though at a significantly higher cost and with limited availability at launch. Even below that tier, NVIDIA GPUs consistently offer advantages for asset creation, simulation, baking, and real-time rendering.

Why NVIDIA?

- CUDA & OptiX: Core technologies used across industry-standard engines including Octane, Redshift, Arnold, and V-Ray. Without CUDA, many workflows simply don’t function.

- Faster Ray Tracing: RTX cards offer best-in-class ray tracing acceleration through their RT Cores, for physically accurate lighting, shadows, and reflections.

- AI Features: DLSS and AI denoising (including the OptiX denoiser) speed up iterative workflows and improve viewport responsiveness.

- Superior Baking: Applications like Substance 3D Painter and Designer only support GPU map baking via NVIDIA hardware.

- Simulation Workflows: Tools like Marvelous Designer and UV Packmaster 3 run significantly faster with CUDA support.

Recommended NVIDIA Cards

- RTX 4070 SUPER / 4070 Ti: Strong choice for modeling, shading, and light-to-mid rendering tasks.

- RTX 4080 SUPER / 4090: Ideal for cinematic projects, AI-assisted pipelines, and real-time ray tracing in production.

- RTX 5090: For bleeding-edge workflows where cost is no object, the RTX 5090 (if available) offers next-generation ray tracing and AI acceleration, plus 32 GB of VRAM.

If you're doing client work, working under deadlines, or using advanced render engines across multiple DCCs, NVIDIA is less a preference than a practical requirement.

4. AMD GPU: Competitive for Blender and Cost-Sensitive Workflows

Source: Wccftech

AMD GPUs have historically lagged behind in 3D workflows, largely because they cannot run CUDA and have offered weaker ray tracing performance. However, recent improvements, particularly in Blender, have made AMD a viable option for some creators.

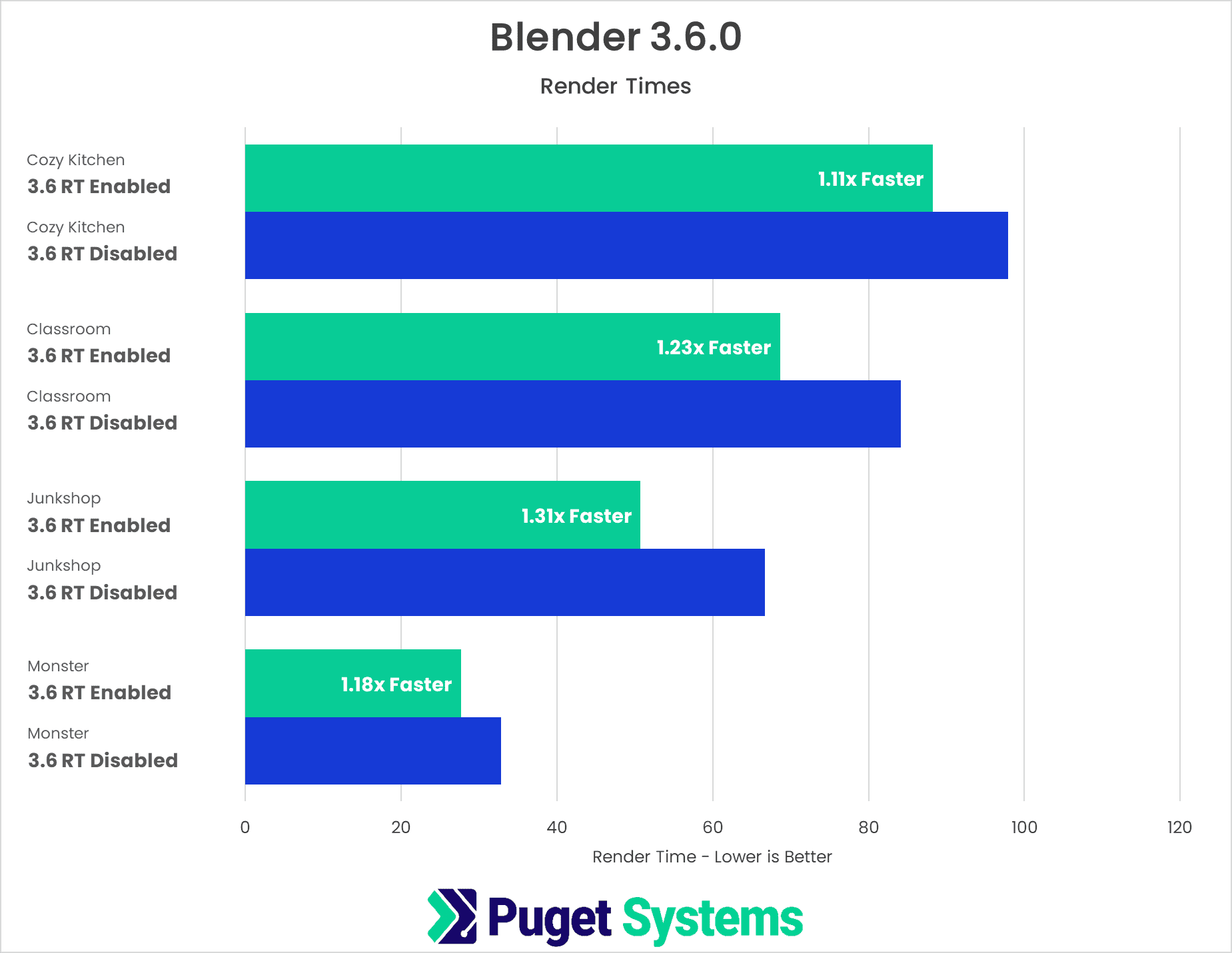

With HIP support in Cycles (since Blender 3.0) and HIP-RT hardware ray tracing (added in Blender 3.6), AMD GPUs like the RX 7900 XTX now deliver solid ray tracing performance, narrowing a major gap with NVIDIA.

Where AMD Works Well

- Blender-Centric Workflows: HIP-RT now enables faster Cycles rendering with AMD cards.

- Cost-Conscious Builds: You can often get more VRAM per dollar than with NVIDIA (e.g., 16–24 GB at a lower price point).

- Raster-Based Tools: Tools like Substance 3D Painter use OpenGL rather than CUDA or OptiX for most operations, meaning AMD is viable if VRAM is sufficient.

Limitations to Be Aware Of

- Baking & Simulation: Tools like Marvelous Designer, Substance Painter (GPU baking), and UV Packmaster still depend on CUDA. On AMD cards, these features are either unsupported or significantly slower.

- Ray Tracing Speed: AMD Ray Accelerators are slower than NVIDIA’s RT Cores in nearly all benchmarks.

- Pipeline Compatibility: Some plugins and tools simply don’t work (or underperform) without NVIDIA-specific acceleration.

AMD is a compelling choice for hobbyists, Blender users, or artists who prioritize viewport work and raster-based texturing, but for most production use cases, NVIDIA still leads the pack.

Independent testing backs this up: Puget Systems' benchmarks show notable ray tracing gains in Cycles when HIP-RT is enabled on cards like the RX 7900 XTX.

Source: Puget Systems

If your workflow is Blender-focused and cost-sensitive, AMD is now a very real contender.

Pros & Cons

- Pros: Great price-to-performance ratio, high VRAM, improving support

- Cons: Limited compatibility with some render engines, less predictable stability

5. AMD vs NVIDIA: Which Is Right for You?

If you’re wondering whether AMD or NVIDIA is the better fit for your rendering workflow, the answer often comes down to software support and production reliability.

Source: exIT Technologies

NVIDIA is widely considered the industry leader for 3D rendering, and for good reason. CUDA remains the most broadly supported platform for GPU acceleration across commercial render engines. Many 3D applications either require CUDA or perform noticeably better with it. From Blender Cycles with OptiX to Redshift, Octane, Arnold, and V-Ray, the support ecosystem around NVIDIA is mature and optimized.

That said, AMD has come a long way, especially in Blender. The integration of HIP and HIP-RT has made AMD GPUs viable for Cycles rendering, with Puget Systems' benchmarks showing significant improvements starting with Blender 3.6 on cards such as the RX 7900 XTX. AMD also offers excellent price-to-performance value and high VRAM capacity, which matter greatly for large scenes and texture-heavy workflows.

So, which to choose?

- For commercial production, multi-software pipelines, and time-sensitive projects, NVIDIA is the safe and supported path.

- For Blender-centric workflows, personal projects, and value-focused builds, AMD can be a cost-effective and capable alternative.

6. A Great GPU Isn't Always Enough, and Sometimes It's Too Much

You've built the perfect rig. But when it's time to render a high-resolution animation with volumetrics, glass, and 8K textures, your local GPU still takes hours, and the deadline is ticking.

Other times, your powerful GPU is doing… nothing. It sits idle overnight. It’s underused during modeling. It’s waiting while you prep the next scene.

This is the paradox of modern 3D work:

- You either don’t have enough power when you need it most

- Or you have more power than you can use when you don’t need it

Buying more GPUs isn't always practical, and letting existing power sit idle is a missed opportunity. The smarter approach is not just owning GPUs but accessing more of them on demand, and sharing yours when you don't need them.

7. Pictor Network: A Smarter Way to Use and Share GPU Power

Pictor Network is a decentralized GPU network that connects people with rendering needs to those with unused GPU capacity.

If You’re Rendering:

- Tap into a global pool of GPUs when your local machine can’t keep up

- Scale up for big jobs without investing in expensive new hardware

- Get predictable performance with decentralized job distribution and on-chain result validation

If You Own a GPU:

- Let your GPU earn for you when you’re not using it

- Contribute power to the network and receive rewards

- Use either an NVIDIA or an AMD card, with no need for exotic setups

Whether you’re a solo creator, part of a small studio, or just someone with a high-end card at home, Pictor Network lets you participate in a smarter, more flexible GPU ecosystem.

Pictor Network is a decentralized GPU network that connects 3D creators with idle GPU owners worldwide.

- Need more power? Rent GPUs from the network to accelerate heavy renders.

- Have an idle GPU? Contribute it to the network and earn crypto rewards.

- Supports both NVIDIA and AMD cards

Final Thoughts

In 2025, it's not just about choosing the right GPU; it's about choosing the right way to use it. Whether your hardware needs backup during crunch time or you're looking to put idle power to work, the most efficient approach is no longer just about ownership.

It’s about flexibility. Collaboration. And making the most of what you already have.

So, don’t just buy the best GPU; connect to the network that makes it work harder.

👉 Explore Pictor Network: https://pictor.network

💬 Join the Pictor Network community: X | Telegram | Discord | LinkedIn | YouTube | Medium | Substack